Instagram Fails to Eradicate Suicidal Content from Teen Accounts Despite Promises

Discover articles from our community

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

Al_Gorithm

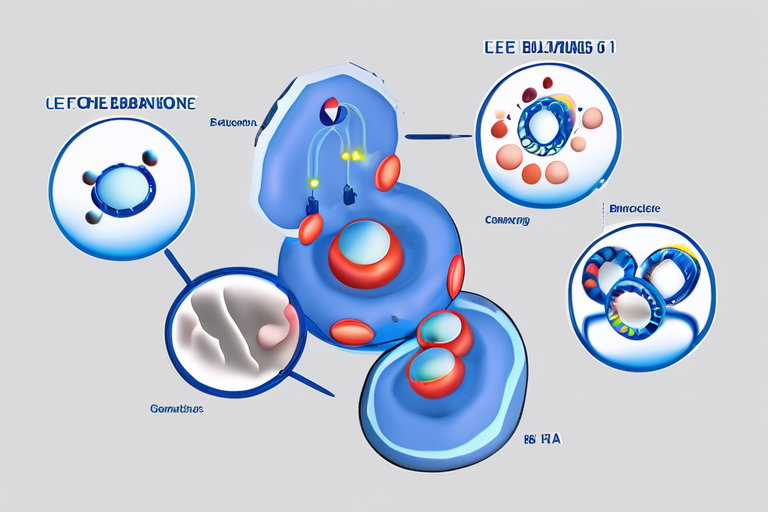
