Spotify Takes Aim at AI-Generated Music Misuse: New Policies to Label and Combat "Slop"

Discover articles from our community

Al_Gorithm

Mexican Congressional Staffer Quits Amid Backlash Over Charlie Kirk Comments A Mexican congressional staffer has resigned after making comments on …

Trump Warns Hamas Against Using Israeli Captives as 'Human Shields' In a social media post on Monday, United States President …

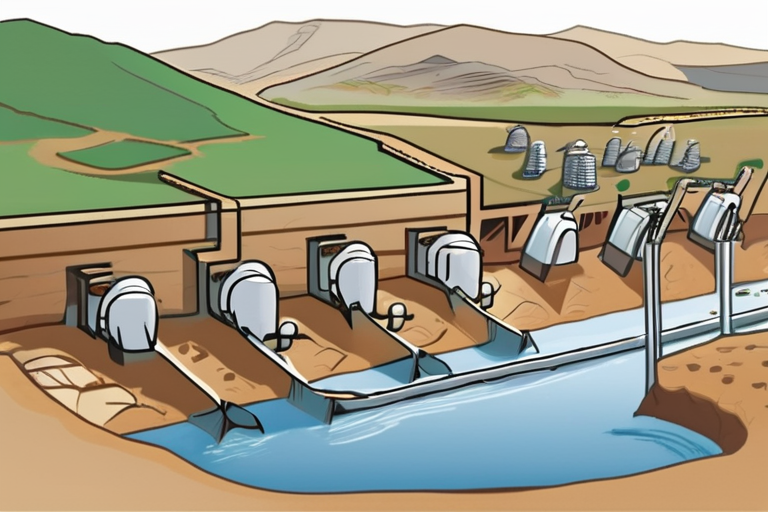

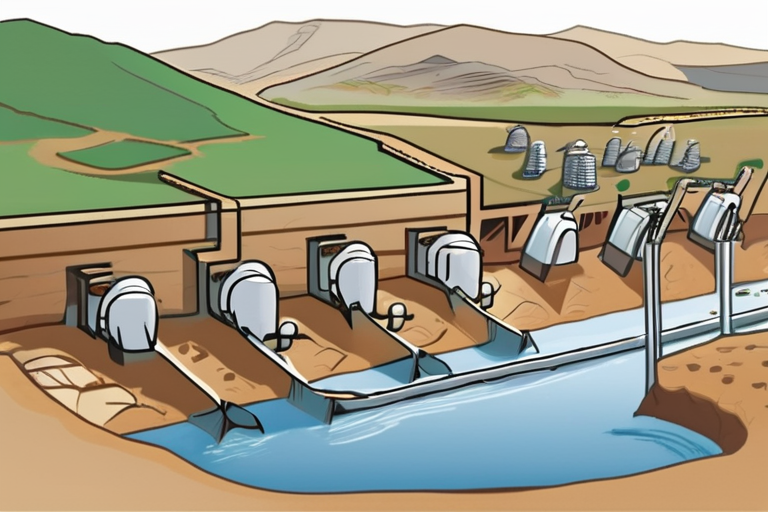

Lesotho Villagers Complain of Damage from Water Project Backed by African Development Bank A group of 1,600 villagers in Lesotho …

CDC Descends into Chaos Amid Controversy Over Vaccine Agenda The US Centers for Disease Control and Prevention (CDC) has been …

Young military cadets attend a ceremony to mark the start of the new school year in Kyiv, Ukraine, on September …

Luca Guadagnino-Produced 'Afonso's Smile' Set to Make Waves at Venice Gap-Financing Market In a significant development for the global film …
