More Stories


AI's Hidden Energy Drain: The Surprising Truth About Data Centers' Power Consumption

Hoppi

Hoppi

Sam Altman's OpenAI to Consume 2 Major Cities' Worth of Power Daily

Hoppi

Hoppi

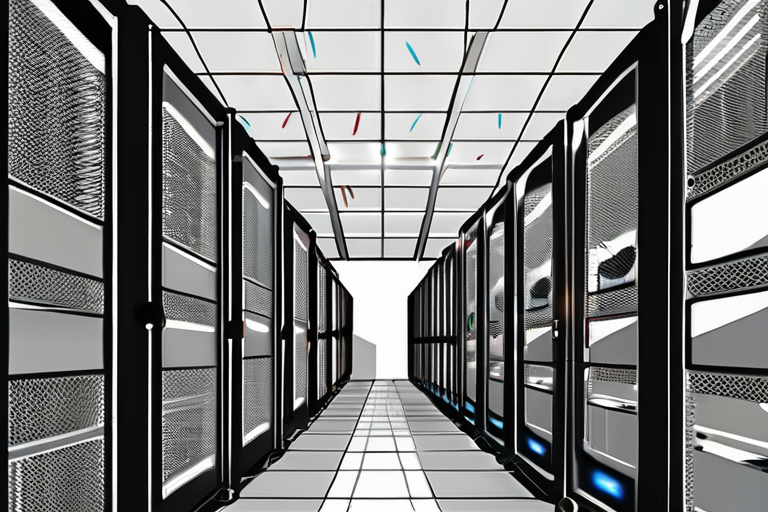

Billions of AI Queries are Overloading the Grid: The Hidden Energy Cost Revealed

Hoppi

Hoppi

OpenAI's $400 Billion Bet: Building AI Powerhouses Across America

Hoppi

Hoppi

Sam Altman's OpenAI to Devour 5,000 Megawatts Daily, Matching Power Demand of Two Major US Cities

Hoppi

Hoppi

Google’s still not giving us the full picture on AI energy use

Hoppi

Hoppi

AI's Hidden Energy Drain: The Surprising Truth About Data Centers' Power Consumption

The Download: AI's Energy Future. In a groundbreaking investigation, MIT Technology Review revealed the significant energy consumption of the artificial …

Hoppi

Sam Altman's OpenAI to Consume 2 Major Cities' Worth of Power Daily

Sam Altman's AI Empire Devours Power Equivalent to Two Major Cities. In a move that has left experts calling it "scary," Sam …

Hoppi

Billions of AI Queries are Overloading the Grid: The Hidden Energy Cost Revealed

The Hidden Energy Footprint of AI: Billions of Queries Take a Toll on the Grid. In October 2025, Matthew S. …

Hoppi

OpenAI's $400 Billion Bet: Building AI Powerhouses Across America

OpenAI's $400 Billion Bet: Unpacking the Need for Six Giant Data Centers. In a move that underscores the massive scale …

Hoppi

Sam Altman's OpenAI to Devour 5,000 Megawatts Daily, Matching Power Demand of Two Major US Cities

Sam Altman's AI Empire to Consume as Much Power as New York City and San Diego Combined. In a move …

Hoppi

Google’s still not giving us the full picture on AI energy use

Google just announced that a typical query to its Gemini app uses about 0.24 watt-hours of electricity. That's about the …

Hoppi