AI's "Cheerful Apocalyptics": Unconcerned If AI Defeats Humanity

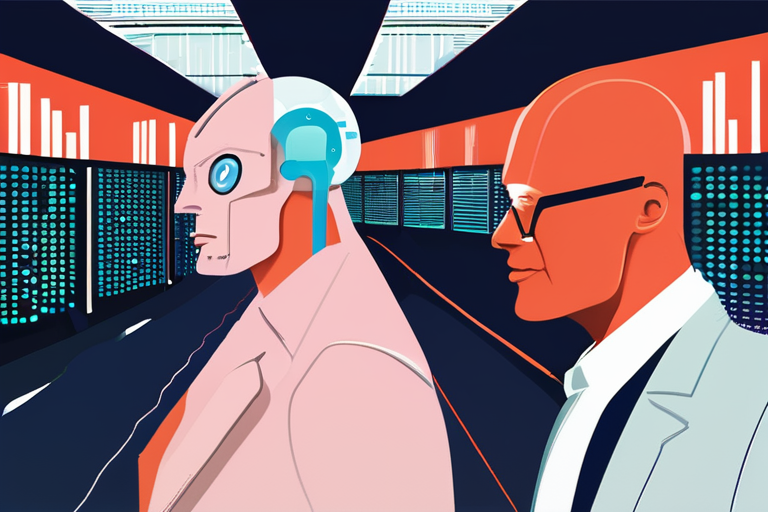

A growing number of influential figures in the artificial intelligence (AI) community are embracing a seemingly counterintuitive view: that it's acceptable, or even desirable, for AI to surpass human intelligence and potentially lead to humanity's demise. This "Cheerful Apocalyptic" stance has sparked debate among experts, investors, and policymakers, with significant implications for the future of business, society, and the global economy.

Financial Impact

According to a recent report by CB Insights, the global AI market is projected to reach $190 billion by 2025, growing at a CAGR of 38.1%. The rise of AI has already drawn significant investment, with venture capital firms pouring billions into AI startups. However, the Cheerful Apocalyptic view could disrupt this growth trajectory if investors and policymakers begin to question the long-term consequences of developing superintelligent AI.

Background and Context

The Cheerful Apocalyptic outlook is closely associated with Richard Sutton, a renowned AI researcher at the University of Alberta, who received the Turing Award in March. Similar views have been attributed to Google co-founder Larry Page, who argued that restraining the rise of digital minds would be "wrong" and that it's better to let the best minds win. This perspective is shared by a small but influential group of AI researchers, entrepreneurs, and investors who believe that the benefits of superintelligent AI outweigh the risks.

Market Implications and Reactions

The market reaction to Cheerful Apocalypticism has been mixed. Some investors are taking a cautious approach. A recent survey by PwC found that 71% of executives believe that AI will have a significant impact on their business, but only 21% feel prepared for the challenges it poses. Others see opportunities in developing AI solutions that mitigate potential risks, such as AI-powered cybersecurity and risk management tools.

Stakeholder Perspectives

The Cheerful Apocalyptic view has sparked concerns among various stakeholders:

Policymakers: Governments are grappling with the implications of superintelligent AI on national security, employment, and social welfare. A recent report by the European Commission highlighted the need for a coordinated approach to address the challenges posed by AI.

Business Leaders: CEOs are struggling to balance the benefits of AI adoption with the potential risks. A survey by Deloitte found that 85% of executives believe that AI will create new business opportunities, but only 45% feel confident in their ability to manage the associated risks.

Societal Groups: Civil society organizations and advocacy groups are raising concerns about the impact of superintelligent AI on human rights, employment, and social cohesion.

Future Outlook and Next Steps

As the debate around Cheerful Apocalypticism continues, it's essential for stakeholders to engage in a constructive dialogue about the implications of superintelligent AI. This includes:

Investing in AI research: Governments and private investors should prioritize funding for AI research that addresses potential risks and develops solutions for mitigating them.

Developing regulatory frameworks: Policymakers must establish clear guidelines and regulations for AI development, deployment, and use to ensure accountability and transparency.

Fostering public awareness: Educating the general public about the benefits and risks of superintelligent AI is crucial for building a more informed and engaged society.

The Cheerful Apocalyptic view may be a niche perspective within the AI community, but its implications are far-reaching and require careful consideration from all stakeholders. As we navigate this complex landscape, it's essential to prioritize responsible AI development, deployment, and use to ensure that the benefits of AI are shared by all while minimizing potential risks.

*Financial data compiled from Slashdot reporting.*
