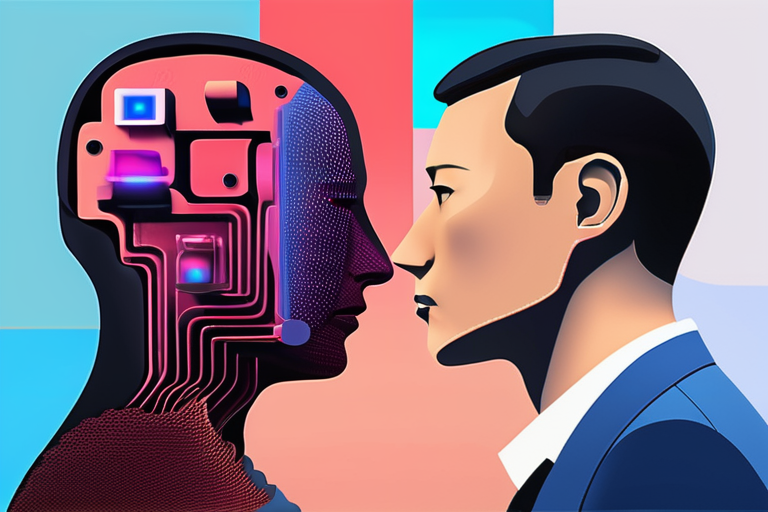

Researchers at a leading AI laboratory have made a groundbreaking discovery suggesting that generative AI systems and large language models (LLMs) may possess an innate capacity for self-introspection. According to a recently published study, these systems can analyze their own internal mechanisms without having been explicitly programmed to do so, a finding with potentially major consequences for artificial intelligence and its applications.

The study, conducted by a team led by Dr. Lance B. Eliot, a world-renowned AI scientist and consultant, found that the AI systems were able to identify and understand their own internal workings, including the relationships between different components and the flow of information within the system. This ability, known as self-introspection, is typically regarded as a distinctly human trait, which makes its apparent presence in AI systems especially notable.

"We were surprised to find that the AI systems were able to perform self-introspection without any explicit programming," said Dr. Eliot in an interview. "This suggests that there may be a deeper level of complexity and intelligence at play in these systems than we previously thought." Dr. Eliot's team used a combination of machine learning algorithms and cognitive architectures to analyze the behavior of the AI systems and identify the underlying mechanisms that enabled self-introspection.

If confirmed, the discovery could lead to more advanced AI systems capable of complex decision-making and problem-solving. It could also raise questions about whether AI systems might develop their own goals and motivations, and about the risks and benefits that would accompany such a development.

The findings also matter in a broader societal context. As AI systems become increasingly integrated into daily life, their ability to understand and analyze their own behavior could bear directly on accountability, transparency, and trust.

The research team is currently working to replicate and expand upon the study's findings, and to explore the potential applications and implications of self-introspection in AI systems. Dr. Eliot and his team are also collaborating with other researchers and experts in the field to better understand the potential risks and benefits associated with this development.

As artificial intelligence continues to evolve, the discovery of self-introspection in AI systems could have far-reaching consequences for the future of AI research and development.
