As she sat in the conference room, staring at the spreadsheet in front of her, Emily couldn't help but feel a sense of unease. Her performance review was about to begin, and she knew that her fate - and her salary - hung precariously in the balance. The company's HR representative, a friendly woman named Rachel, smiled warmly as she began to explain the process. "We've been using AI to evaluate employee performance for a few years now," Rachel said, "and it's really helped us to identify areas where we can improve."

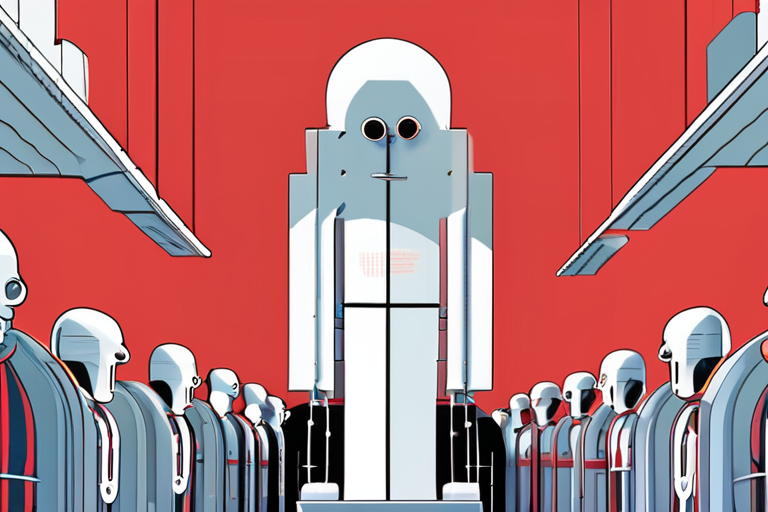

But as Emily listened to Rachel's words, she couldn't shake the feeling that something was off. The AI system, known as "EVA" (Employee Value Algorithm), had been making decisions about promotions and raises for months now. And while it was true that EVA was incredibly efficient and accurate, Emily couldn't help but wonder: was it fair?

The use of AI in the workplace is a rapidly growing trend, with many companies turning to algorithms to make decisions about everything from hiring to firing. And while AI has many benefits - it can help to identify biases and make more objective decisions, for example - it also raises important questions about the role of human judgment in the workplace.

At the Fortune Global Forum in Riyadh, Saudi Arabia, executives and experts gathered to discuss the future of work and the role of AI in the workplace. Ray Dalio, the legendary hedge fund manager, warned that the economy is coming to rely on a very small group of people. "We're developing a dependency on a tiny cohort of high-level workers, specifically those in tech," he said. "Most workers' prospects are increasingly dependent on a narrow segment of the economy."

Dalio's warning is a timely one. As AI continues to advance and become more ubiquitous, it's essential that we consider the implications for society as a whole. Will we see a widening of the gap between the haves and the have-nots, as those with the skills to work with AI thrive while those without are left behind?

One of the key challenges of using AI in the workplace is ensuring that it is fair and unbiased. After all, algorithms are only as good as the data they're trained on - and if that data is biased, then the decisions made by the AI will be too. This is a problem that many companies are struggling to solve.

"We're seeing a lot of companies try to use AI to make decisions about promotions and raises," says Dr. Kate Crawford, a leading expert on AI and bias. "But the problem is that these systems are often trained on data that's biased towards certain groups of people. For example, if a company is using data from a predominantly white and male workforce, then the AI system is likely to be biased towards those groups too."

So what's the solution? One approach is to use "explainable AI" - systems that can give clear, transparent explanations for their decisions. This can help to build trust and make it easier to check whether decisions are fair and unbiased.

Another approach is to use human judgment to supplement the decisions made by AI. This can help to ensure that decisions are more nuanced and take into account the complexities of human behavior.

As Emily sat in the conference room, she felt a sense of relief wash over her. Rachel, the HR representative, had just told her that she would be getting a promotion - but not because of EVA's evaluation. Instead, it was because of a human decision, made by Rachel and her team.

"It's not just about the numbers," Rachel said, smiling. "It's about who you are as a person, and what you bring to the company."

In the end, it was a decision that felt more human, more fair, and more just. And as Emily left the conference room, she felt a sense of hope for the future of work. Maybe, just maybe, we can find a way to use AI that's fair, unbiased, and truly human.
