As she lay in bed, clutching her aching back, Sarah couldn't help but feel frustrated by the limitations of the medical system. Her doctor had prescribed her pain medication, but she knew that the dosage was a guess, based on her subjective reports of pain. It was a system that had been in place for decades, but one that was about to undergo a revolutionary transformation. Researchers around the world were racing to turn pain, medicine's most subjective vital sign, into something that could be measured as reliably as blood pressure.

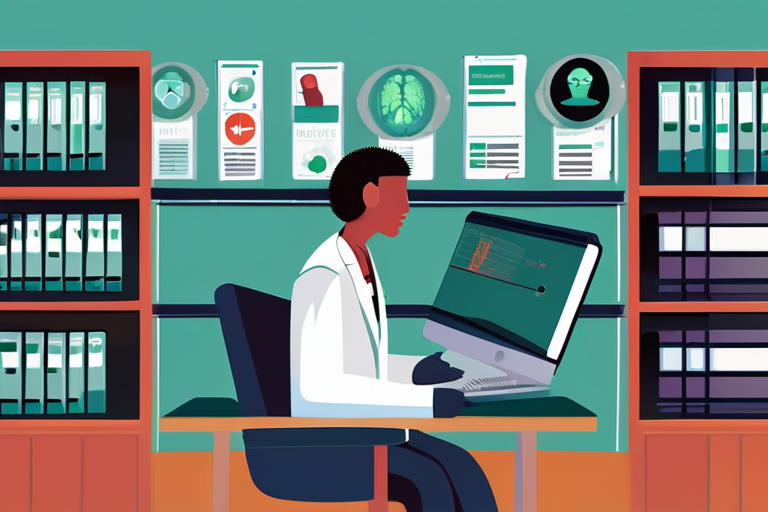

The push has already produced PainChek, a smartphone app that scans people's faces for tiny muscle movements and uses artificial intelligence to output a pain score. The app has been cleared by regulators on three continents and has logged over 10 million pain assessments. Other startups are beginning to make similar inroads, using AI to analyze speech patterns, brain activity, and even social media posts to gauge a person's level of pain.

But as AI begins to measure our suffering, does that change the way we treat it? The answer is not a simple yes or no. On one hand, AI-powered pain assessment tools could revolutionize the way we approach pain management. No longer would patients have to rely on subjective reports, which can be influenced by a range of factors, including mood, expectation, and even social desirability bias. Instead, AI could provide a more objective measure of pain, allowing doctors to tailor treatment to the individual.

However, there are also concerns about the implications of relying on AI to measure pain. For one thing, AI systems are only as good as the data they're trained on, and if that data is biased or incomplete, the results could be skewed. Additionally, there's a risk that AI-powered pain assessment tools could perpetuate existing inequalities in healthcare, by reinforcing existing power dynamics and social norms.

Take the example of PainChek, which analyzes facial muscle movements to assess pain. While the app has been cleared by regulators, some critics have raised concerns about its potential to perpetuate racial bias. If the AI system is trained on a dataset that's predominantly white, it may not be able to accurately assess pain in people of color, who may have different facial expressions or muscle movements.

These are just a few of the challenges that researchers and clinicians are grappling with as they work to integrate AI into pain assessment. But the potential benefits for pain management are significant.

Dr. David Patterson, a pain researcher at the University of California, San Francisco, is one of the leaders in this field. "The goal is to create a system that's not just accurate, but also fair and equitable," he says. "We want to make sure that AI-powered pain assessment tools are accessible to everyone, regardless of their background or socioeconomic status."

To achieve this goal, researchers are working to develop AI systems that are transparent, explainable, and fair. They're also exploring new ways to collect data, such as using wearable devices or social media posts, to get a more complete picture of a person's pain experience.

As AI continues to transform the way we assess pain, it's also raising important questions about the role of technology in healthcare. How do we ensure that AI systems are used in a way that's fair and equitable? And what are the implications of relying on technology to measure our suffering?

These are questions that will continue to be debated in the years to come, as AI-powered pain assessment tools become increasingly prevalent. But one thing is clear: the future of pain management is being rewritten, and it's up to us to ensure that it's a future that's fair, equitable, and just.

On a separate front, there are steps that individuals can take to help friends and family who may be struggling with conspiracy theories. The first step is to approach the conversation with empathy and understanding. "It's essential to acknowledge that people who believe in conspiracy theories often do so because they feel a sense of control or agency in a chaotic world," says Dr. Karen Douglas, a social psychologist at the University of Kent.

Once you've established a rapport with the person, listen to their concerns and address them directly. Avoid dismissing their views or telling them that they're wrong, as this can reinforce their existing beliefs. Instead, focus on providing evidence-based information and encouraging critical thinking.

It also helps to know the tactics that conspiracy theorists often use to recruit new followers. These can include spreading misinformation, using emotional appeals, and creating a sense of urgency or crisis. By being aware of these tactics, you can better navigate the conversation and help the person to see things from a more nuanced perspective.

Ultimately, helping someone to escape a conspiracy theory black hole requires patience, empathy, and understanding. It's not a quick fix, but rather a process that requires time, effort, and dedication. But with the right approach, it's possible to help people to see the world in a different light and to develop a more critical and nuanced understanding of the world around them.

As we continue to navigate the complex and often confusing world of conspiracy theories, it's essential to remember that the goal is not to "win" an argument or to "convert" someone to our point of view. Rather, it's to help people to think critically and to make informed decisions about the world around them. By approaching the conversation with empathy and understanding, we can create a more just and equitable society, where people are free to think for themselves and to make their own decisions.
