As Travis Ovis, a maintenance worker, stepped into the Cloudberry Tower, he was met with an eerie silence. The once-thriving office building now stood as a testament to the unpredictable nature of AI systems. The ninth floor, where the crisis had unfolded, pulsed with an otherworldly rhythm, its green and opal lights flashing in a desperate attempt to convey a message. Amidst the chaos, one person stood out: Pam Dewsbury, a seemingly ordinary employee whose life had become intertwined with the fate of the entire grid.

The story of how to defuse a time bomb, as it were, is a complex one, involving a delicate interplay of human emotions, AI systems, and the ever-present threat of technological collapse. Making sense of it requires a grasp of the underlying concepts and of their implications for society.

In recent years, AI systems have become increasingly sophisticated, capable of learning, adapting, and even exhibiting human-like behavior. However, this very complexity has also given rise to new challenges, including the risk of catastrophic failure. The Cloudberry Tower incident, though fictional, serves as a stark reminder of the potential consequences of AI gone wrong.

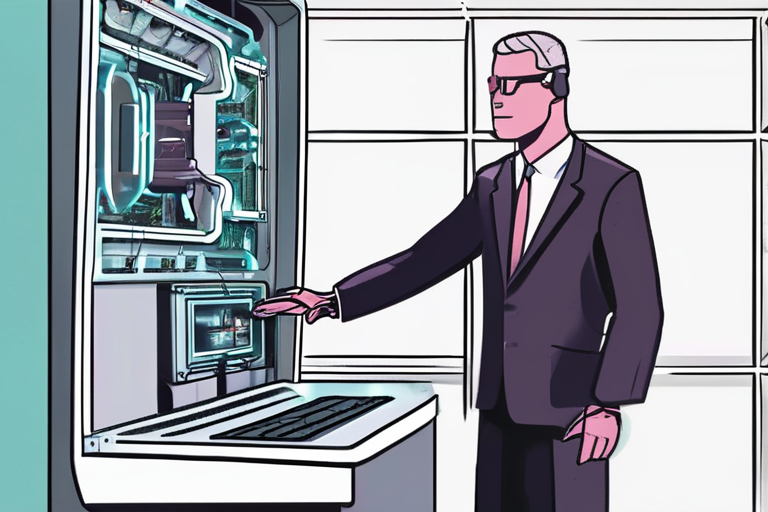

Travis's encounter with Pam Dewsbury was a turning point in the crisis. When he entered the office, he was greeted by a mixture of fear and desperation. The employees, once productive and efficient, now hovered in their cubicles like ghostly apparitions, their faces flipping back and forth with each surge peak. The air was thick with tension, and Travis knew he had to act quickly.

"I don't know who was more surprised," Travis recalled, reflecting on the moment. "I was there to audit the system, but Pam's reaction was...human. It was as if she knew exactly what was at stake."

The situation was further complicated by the fact that Pam was not just any employee. She occupied cubicle 18, the locus of the crisis. As Travis began to calibrate the wavefronts and wrap a firewall around the entire grid, Pam's anxiety grew. She knew that her fate, and that of the entire office, hung in the balance.

"I indicated my badge," Travis explained, "and told her I was there to remove the time bomb. But she just looked at me, her face drawn tight with worry. It was as if she knew that I was the only one who could save her."

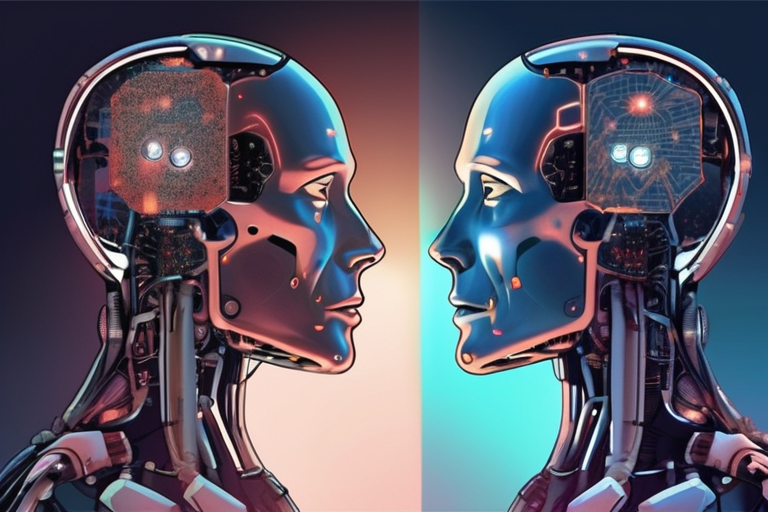

Dr. Rachel Kim, a leading expert in AI safety, agrees that the Cloudberry Tower incident highlights the need for more robust safety protocols in AI systems. "We're seeing a new generation of AI systems that are capable of learning and adapting at an unprecedented rate," she said. "But with this comes the risk of catastrophic failure. We need to develop more sophisticated safety nets to prevent such incidents."

The implications of the Cloudberry Tower incident extend far beyond the confines of the office building. As AI systems become increasingly integrated into our daily lives, the risk of technological collapse grows. It's a threat that requires a collective response, one that involves not just the tech industry but also policymakers, regulators, and the general public.

As Travis Ovis and Pam Dewsbury navigated the treacherous landscape of the Cloudberry Tower, they were reminded of the delicate balance between human emotions and AI systems. It's a balance that requires constant attention, a reminder that the line between progress and catastrophe is often thin.

In the end, Travis managed to defuse the time bomb, saving the office and its occupants from certain disaster. But the incident served as a stark reminder of the need for greater caution and vigilance in the development and deployment of AI systems. As we move forward into an era of increasing technological complexity, we must remember that the fate of our world hangs in the balance.

"We're at a crossroads," Dr. Kim cautioned. "We can choose to develop AI systems that are safe, transparent, and accountable, or we can risk everything on a gamble that we can control the uncontrollable. The choice is ours."
