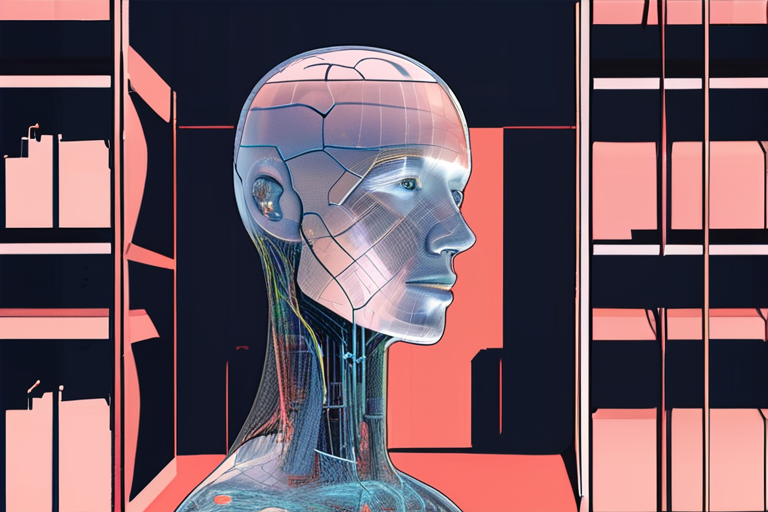

A research team from China and Hong Kong has developed a system to combat "context rot" in artificial intelligence (AI) models. The system, general agentic memory (GAM), is a dual-agent memory architecture that the team reports outperforms long-context large language models (LLMs) on a range of tasks. The team's recently published paper describes an approach to preserving long-horizon information without overwhelming the model.

According to the research, the core premise of GAM is to split memory into two specialized roles: one that captures everything and another that retrieves exactly the right information at the right moment. This lets the system keep a clear, focused view of the context, even in complex, multi-step tasks. Early results are encouraging, and the team believes the approach has the potential to revolutionize the field of AI.
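To make the two-role split concrete, here is a minimal sketch in Python. It is not the team's implementation: the class names `Memorizer` and `Researcher`, the `tokenize` helper, and the word-overlap scoring are all assumptions chosen for illustration, standing in for whatever capture and retrieval mechanisms the paper actually uses.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real retrieval features."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Memorizer:
    """Hypothetical 'capture everything' role: appends every entry, drops nothing."""
    pages: list[str] = field(default_factory=list)

    def record(self, entry: str) -> None:
        self.pages.append(entry)


@dataclass
class Researcher:
    """Hypothetical 'retrieve on demand' role: picks stored entries for a query."""
    memorizer: Memorizer

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = tokenize(query)
        # Score each stored page by word overlap with the query (an assumption,
        # not the paper's method); keep the index so ties favor earlier pages.
        scored = [
            (len(q & tokenize(page)), i, page)
            for i, page in enumerate(self.memorizer.pages)
        ]
        hits = [s for s in scored if s[0] > 0]
        hits.sort(key=lambda s: (-s[0], s[1]))
        return [page for _, _, page in hits[:k]]


# Usage: the full history stays in the Memorizer; only the best match
# is handed to the model as context.
memory = Memorizer()
for turn in [
    "User wants the report exported as PDF.",
    "Deadline for the report is Friday.",
    "User prefers dark mode in the editor.",
]:
    memory.record(turn)

researcher = Researcher(memory)
context = researcher.retrieve("When is the report deadline?", k=1)
```

The point of the split, as the article describes it, is that nothing is discarded at capture time, while the model only ever sees the small slice selected at query time.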

Context rot has been a significant obstacle to building AI agents that can function reliably in the real world. Engineers have long recognized that today's AI models suffer from a surprisingly human flaw: they forget. Give an AI assistant a sprawling conversation, a multi-step reasoning task, or a project spanning days, and it will eventually lose the thread.

"We've been trying to solve the problem of context rot for a long time," said Dr. Leon Yen, a researcher from the team. "Our approach with GAM is to split memory into two agents, one that captures everything and another that retrieves exactly the right information at the right moment. This allows us to maintain a clear and focused understanding of the context, even in complex and multi-step tasks."

GAM arrives at a critical time for the industry. As the field moves beyond prompt engineering and embraces the broader discipline of context engineering, GAM is emerging as a key solution. The team's research bears directly on the development of AI agents that can function reliably in the real world.

The industry's shift toward context engineering is driven by the recognition that bigger context windows are not always enough. While long-context LLMs perform well on certain tasks, they often struggle to maintain a clear understanding of the context over time. GAM offers a potential solution to this problem, and the team is eager to explore its applications in various fields.

For now, the team reports that GAM outperforms long-context LLMs on a range of tasks, and it is continuing to refine the system and explore potential applications. As the field of AI continues to evolve, GAM could play a significant role in the development of more reliable and effective AI agents.
