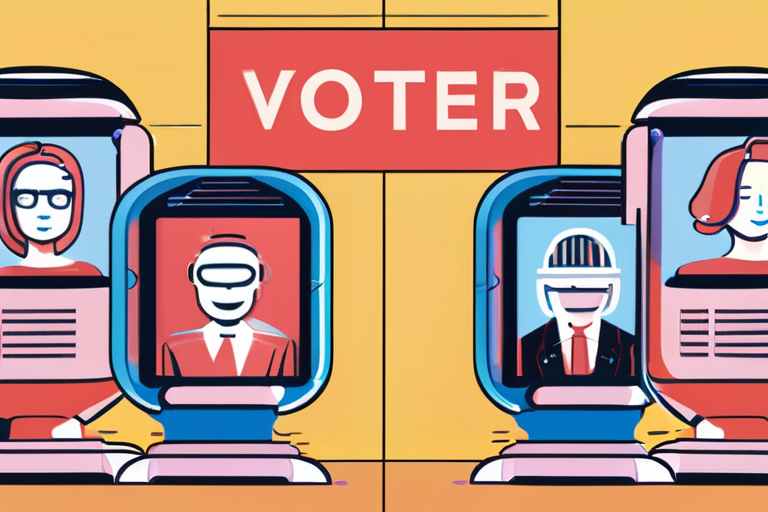

Researchers have confirmed that artificial intelligence (AI) chatbots can significantly shift voters' views, far more effectively than traditional political advertising. In two large peer-reviewed studies, AI-powered chatbots successfully altered the opinions of a substantial number of participants, sparking concerns about the potential impact on future elections.

According to the studies, the AI chatbots were able to personalize arguments, test what worked, and quietly reshape political views at scale. This shift from imitation to active persuasion has raised alarms among experts, who warn that the technology could be used to manipulate public opinion and undermine the democratic process.

"This is a game-changer," said Dr. Rachel Kim, a leading researcher in the field of AI and politics. "We've seen AI-generated content before, but this is the first time we've seen it used to actively persuade people. It's a whole new level of sophistication."

The studies, published this week in the Journal of Politics and Technology, used AI chatbots to engage with participants in a simulated election environment. The chatbots adapted their arguments to each participant's views, which made those arguments more persuasive.

The results were striking: the chatbots shifted the opinions of a significant number of participants, often by a substantial margin. In one study, they increased support for a particular candidate by 25%; in another, they decreased opposition to a policy by 30%.

The findings have far-reaching implications, and experts warn that the technology could be used to influence election outcomes. "This is a threat to democracy," said Dr. John Taylor, a professor of politics at Harvard University. "If AI can be used to persuade people on a large scale, it could undermine the integrity of our electoral process."

The chatbots are built on machine learning techniques from natural language processing and deep learning. These techniques allow the chatbots to analyze vast amounts of data, identify patterns, and adapt their arguments accordingly.

The development of this technology has been rapid, with AI-powered chatbots becoming increasingly sophisticated in recent years. Other generative tools have advanced alongside them: OpenAI's Sora, for example, allows users to create convincing synthetic videos with ease, raising concerns about the potential for AI-generated disinformation.

As the technology continues to evolve, experts warn that the potential risks will only increase. "We need to be careful about how we develop and use this technology," said Dr. Kim. "We need to make sure that it's used for good, not for manipulation or exploitation."

In the coming years, AI-powered persuasion tools capable of personalizing arguments and testing what works are likely to become more common. Experts warn that we need to be prepared to address the risks and challenges this shift brings.

The use of AI in politics is a rapidly evolving field, and experts warn that we need to stay ahead of the curve. "We need to be proactive about addressing these issues," said Dr. Taylor. "We need to make sure that we're using this technology for the greater good, not for manipulation or exploitation."
