As Thomas Kurian, CEO of Google Cloud, took the stage at the Fortune Brainstorm AI event in San Francisco, one issue loomed over the conversation: the enormous energy appetite of AI computing. The International Energy Agency estimates that some AI-focused data centers consume as much electricity as 100,000 homes, and Kurian has long seen this as a problem that needed to be addressed. "We also knew that the most problematic thing that was going to happen was going to be energy, because energy and data centers were going to become a bottleneck alongside chips," he told Fortune's Andrew Nusca.

Kurian and his team have been working on a three-part strategy to meet the energy demands of AI computing. They took a long-term view, designing their machines to be highly efficient from the start. "We knew that the energy problem was going to be a challenge, so we designed our machines to be super efficient," Kurian explained. But efficiency alone is not enough; the energy itself also has to be sourced sustainably.

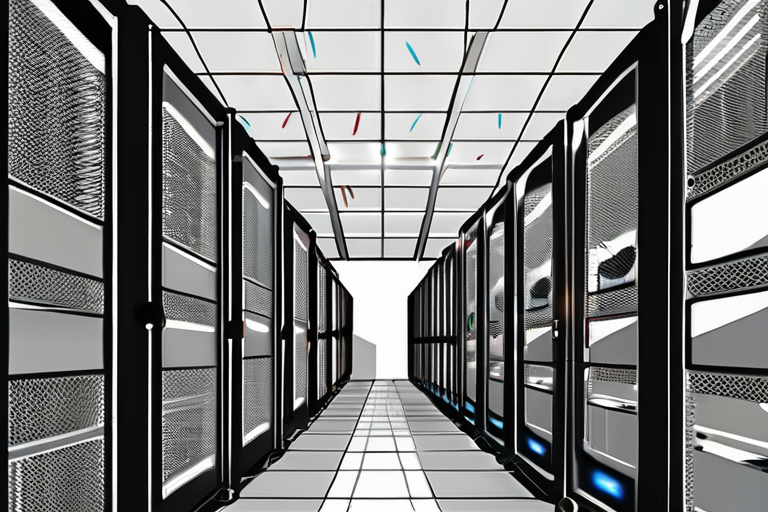

The energy needs of AI computing are staggering. Some of the largest facilities under construction could use 20 times as much electricity as 100,000 homes. Across the industry, worldwide data center capacity is set to increase by 46% over the next two years, a jump of almost 21,000 megawatts, according to real estate consultancy Knight Frank. Meeting that demand matters not only for the environment but also for the future of AI itself.

As Kurian pointed out, the energy demands of AI computing are not only a technical challenge but also a societal one. "If we don't get this right, we risk creating a system that is not sustainable, and that's not just about the environment - it's about the future of AI itself," he warned. The implications are far-reaching, from environmental impact to the risk of energy shortages and power outages.

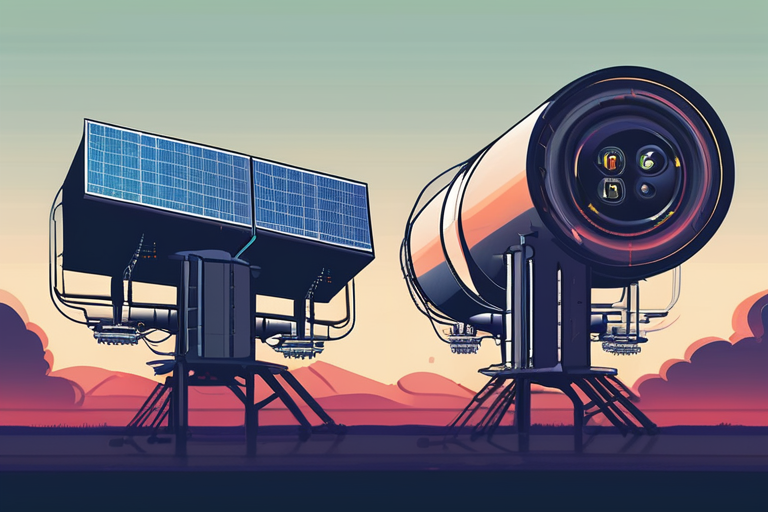

So what's the solution? Kurian's three-part strategy works as follows. First, Google Cloud is working to source energy from renewables such as solar and wind power. Second, it is investing in energy-efficient technologies, such as advanced cooling systems and optimized data center design. Third, it is exploring new business models, such as energy-as-a-service, to make it easier for customers to access sustainable energy.

Google Cloud is not alone in this effort. Other companies, including Microsoft and Amazon, are also pursuing sustainable energy solutions for AI computing. Governments are taking notice as well, with initiatives such as the European Union's Green Deal and the US government's commitment to net-zero emissions by 2050.

As Kurian concluded, meeting the energy demands of AI computing will take a collective effort. "We need to work together to create a sustainable future for AI, and that means addressing the energy problem head-on," he said. With its three-part strategy in place, Google Cloud aims to lead the charge, but the rest of the industry will need to follow suit.

In the end, the future of AI depends on our ability to meet its energy demands sustainably. As Kurian warned, getting this wrong would put at risk not just the environment but the future of AI itself. The clock is ticking, and it's time to act.
