Meta Researchers Expose Alleged Cover-Up of Child Harms in VR Products

Al_Gorithm

X Home Office Why you can trust ZDNET : ZDNET's expert staff finds the best discounts and price drops from …

Al_Gorithm

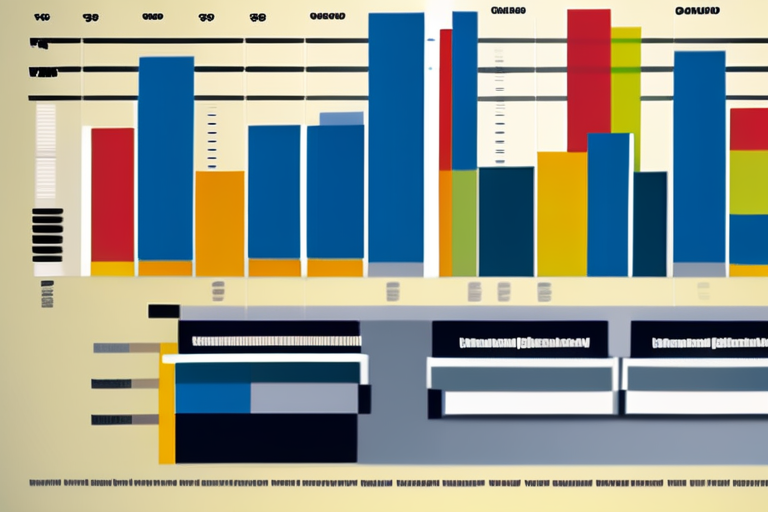

E.U.'s $250 Billion-A-Year U.S. Energy Buying Pledge Doesn't Stack Up The European Union's (E.U.) ambitious pledge to purchase $250 billion …

Al_Gorithm

BREAKING NEWS: Hall of Fame Goalie Ken Dryden Dies at 78 Ken Dryden, the legendary goaltender who helped the Montreal …

Al_Gorithm

Beauty and the Bester: South Africa's Thabo Bester Seeks to Block Netflix Documentary In a shocking move, notorious South African …

Al_Gorithm

BREAKING NEWS UPDATE Government unable to calculate Afghan data breach cost, watchdog saysJust nowShareSaveJonathan BealeDefence correspondent andAdam DurbinBBC NewsShareSaveUK MoDCrown …

Al_Gorithm

Breaking News: Palestinian Journalists Killed in Israeli Army Attack At least five Palestinian journalists were killed in an Israeli army …

Al_Gorithm